Creating a NestJS application (Part II)

By Joao Paulo Lindgren

Part II

- Add Persistence with TypeORM

- Add Configuration Service

- Add Docker and Docker Compose

- Add Elastic Search

- References:

In Part I of this series we created a NestJS application with a post entity, a posts service, a couple of endpoints to manage posts, and authentication using Google OAuth. While that was a lot of work, we are still far from having a useful sample application. We are missing a core part of most web applications: data persistence. In this part, we will persist our data using a library called TypeORM and a Postgres database. Because the application needs some sensitive configuration parameters, like the database username and password, the section after that is dedicated to configuration: how can we remove these hardcoded parameters from our code, and how should we deal with them? At that point we should have a small, functional sample application with entities, validation, endpoints, authentication, persistence, and configuration. After that, I'll show how to dockerize the application and add Elasticsearch to index the posts, making them easier to search and letting us suggest related content. In the future, I'll write a Part III implementing a React frontend that uses our backend.

Add Persistence with TypeORM

For this application, Postgres will be the database of choice. Feel free to choose another one if you prefer. We are going to use a library called TypeORM, and according to its documentation it supports a lot of databases, like MySQL, MariaDB, MSSQL, MongoDB, and others. TypeORM, as the name implies, is an object-relational mapping (ORM) tool, and you probably know what that is. But in case you are completely lost: in short, it is a technique that maps the relational world of our database tables to the object world of our application, represented by the entities and their associations. We do not need to write any SQL to save, delete, and query our entities (although we can for complex custom scenarios or optimizations). The only work is to configure our mappings, and the library does the dirty part of the job under the hood: generating the queries, managing transactions, etc. TypeORM can work with the Repository pattern or the ActiveRecord pattern. Again, if you have no clue what these patterns are, I suggest you look them up or, better yet, read the book Patterns of Enterprise Application Architecture. For this demo I am choosing the ActiveRecord way, firstly because I think it is better suited to small applications, but also as a matter of preference. I will assume that you already have your database installed and configured, but if you don't, just follow the guide and wait until the Docker section, where we will use a Postgres image to create a container with our database. No database installation on your machine will be needed from then on.
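To make the difference between the two patterns more concrete, here is a minimal sketch that uses a plain in-memory array instead of a real database. The names `ActiveUser`, `UserRepository`, and `findActive` are made up for this illustration and are not TypeORM APIs; the only point is where the persistence logic lives in each pattern.

```typescript
// In-memory stand-in for a database table; purely illustrative.
type UserRow = { id: number; name: string; isActive: boolean };
const rows: UserRow[] = [];
let nextId = 1;

// Active Record style: the entity class itself knows how to persist and query.
class ActiveUser {
  id?: number;
  constructor(public name: string, public isActive: boolean = true) {}

  // Inserts on first save; updates are left out to keep the sketch short.
  save(): this {
    if (this.id === undefined) {
      this.id = nextId++;
      rows.push({ id: this.id, name: this.name, isActive: this.isActive });
    }
    return this;
  }

  static findActive(): UserRow[] {
    return rows.filter((row) => row.isActive);
  }
}

// Repository style: the entity is plain data and a separate object persists it.
class UserRepository {
  save(user: { name: string; isActive: boolean }): UserRow {
    const row: UserRow = { id: nextId++, name: user.name, isActive: user.isActive };
    rows.push(row);
    return row;
  }

  findActive(): UserRow[] {
    return rows.filter((row) => row.isActive);
  }
}

new ActiveUser("Ana").save();
new UserRepository().save({ name: "Bob", isActive: false });
const activeNames = ActiveUser.findActive().map((row) => row.name);
console.log(activeNames);
```

With Active Record the calling code only needs the entity class, which is convenient in a small application; with a repository the entity stays free of persistence concerns, which tends to pay off as the code base grows.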

First, let's install the TypeORM dependencies and the Postgres driver. The driver TypeORM uses for Postgres is the pg package.

PS D:\projects\bloggering> npm i --save @nestjs/typeorm typeorm pg

Next, import and configure the TypeOrmModule in the AppModule (the root module of the system).

bloggering/src/app.module.ts

```typescript
import { Module } from "@nestjs/common";
import { AppController } from "./app.controller";
import { AppService } from "./app.service";
import { PostsModule } from "./posts/posts.module";
import { AuthModule } from "./auth/auth.module";
import { TypeOrmModule } from "@nestjs/typeorm";

@Module({
  imports: [
    PostsModule,
    AuthModule,
    TypeOrmModule.forRoot({
      type: "postgres",
      host: "localhost",
      port: 5432,
      username: "{username}",
      password: "{password}",
      database: "bloggering",
      entities: ["dist/**/*.entity.js"],
      synchronize: true,
    }),
  ],
  controllers: [AppController],
  providers: [AppService],
})
export class AppModule {}
```

Later we will remove these sensitive parameters from the code and inject them into the application. Note also that because we imported the TypeOrmModule in the root module, we can inject the TypeORM objects throughout the entire application without importing any other module.

In the entities option, we used a glob pattern to pick up all the entities in the project, so every time we add a new entity, it will not be necessary to change this option.

The last thing to notice in this configuration, and one of the most important, is synchronize: true. Enabling this option makes TypeORM update your database schema automatically every time your entities change. IT IS NOT RECOMMENDED to use this option in production, because you can lose data. In a real project you will probably work with migrations; I will not cover them here because this series is already getting long. Be aware that if you want to use the TypeORM CLI to generate your migrations, you should put your configuration into your .env or ormconfig.json file. But keep calm: in the next section we will move all of our config settings to a .env file and configure a ConfigService.

If you want to learn more about migrations there are some links below.

- Official documentation: https://typeorm.io/#/migrations
- Best practices to initialize the migrations in a new app: https://github.com/typeorm/typeorm/issues/2961
- Some weird behavior when the TypeORM CLI gets its configuration from the env file: https://github.com/typeorm/typeorm/issues/4288
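Coming back to the synchronize warning, one simple way to avoid enabling it in production by accident is to derive the flag from the environment name instead of hardcoding true. The sketch below is only an illustration: `shouldSynchronize` is a hypothetical helper, not something TypeORM or NestJS provides, and it assumes the environment name is available as a plain string.

```typescript
// Hypothetical helper (not part of TypeORM): decides whether schema
// synchronization may be enabled for a given environment name.
function shouldSynchronize(env: string | undefined, requested: boolean): boolean {
  // A missing environment name is treated as production, the safest assumption.
  const isProduction = env === undefined || env === "production";
  return requested && !isProduction;
}

console.log(shouldSynchronize("development", true));
console.log(shouldSynchronize("production", true));
```

The result could then be passed as the synchronize option, so that a production deployment keeps its schema untouched even if the setting is left on somewhere.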

Next, add the mapping decorators to the entities and their properties. These decorators tell TypeORM how to map our objects to the database schema.

bloggering/src/users/user.entity.ts

```typescript
import {
  BaseEntity,
  Column,
  Entity,
  Unique,
  CreateDateColumn,
  UpdateDateColumn,
  VersionColumn,
  PrimaryGeneratedColumn,
} from "typeorm";

@Entity()
@Unique(["email"])
@Unique(["thirdPartyId"])
export class User extends BaseEntity {
  @PrimaryGeneratedColumn("uuid")
  id: string;

  // Profile id given by the OAuth provider (Google in this series).
  @Column()
  thirdPartyId: string;

  @Column({ length: 100 })
  name: string;

  @Column({ length: 120 })
  email: string;

  @Column()
  isActive: boolean;

  @CreateDateColumn({ name: "created_at" })
  createdAt!: Date;

  @UpdateDateColumn({ name: "updated_at" })
  updatedAt!: Date;

  @VersionColumn()
  version!: number;
}
```

bloggering/src/posts/post.entity.ts

```typescript
import { IsNotEmpty } from "class-validator";
import { Entity, Column, PrimaryGeneratedColumn, BaseEntity, ManyToOne } from "typeorm";
import { User } from "src/users/user.entity";

@Entity()
export class Post extends BaseEntity {
  constructor(author: User, title: string, content: string) {
    super();
    this.author = author;
    this.title = title;
    this.content = content;
  }

  @PrimaryGeneratedColumn("uuid")
  id: string;

  @ManyToOne((type) => User, { eager: true })
  author: User;

  @Column("varchar", { length: 255 })
  @IsNotEmpty()
  title: string;

  @Column("text")
  @IsNotEmpty()
  content: string;
}
```

Most of the changes were simple. We decorated the two entities with the @Entity() decorator to tell TypeORM that each class is an entity. If we want, we can even customize the name of the generated table by passing a string to this decorator. Then we decorated the entities' properties with the self-explanatory @Column decorator and each column's specific configuration.

In the Post entity, the author property was changed from a string to a real association with the User object. The @ManyToOne decorator states that there can be many posts for a single user. As an additional parameter we set eager to true, which means that every time we query for a post, its User will be loaded along with it.

Additionally, our entities extend the BaseEntity class. This is only needed if you want to follow the ActiveRecord pattern; otherwise, you can create a repository to manage your entity (see the TypeORM documentation if that is your preference). Because of ActiveRecord, we can call static methods on our classes to manipulate data in the database.

To retrieve the active users for instance we can use:

```typescript
const activeUsers = await User.find({ isActive: true });
```

You can find all the methods of the BaseEntity class in the TypeORM documentation.

We are almost there! Now, just update posts.service.ts and posts.controller.ts to call our active record methods to save and retrieve real data from the database, reflecting the changes in our model.

After the changes, the files should look like this:

bloggering/src/posts/posts.service.ts

```typescript
import { Injectable } from "@nestjs/common";
import { Post } from "./post.entity";
import { User } from "src/users/user.entity";

@Injectable()
export class PostsService {
  // Persists a new post and returns its generated id.
  async createPost(title: string, author: User, content: string): Promise<string> {
    const insertedPost = await Post.save(new Post(author, title, content));
    return insertedPost.id;
  }

  getPosts = async (): Promise<Post[]> => await Post.find();

  // Rejects if no post with the given id exists.
  getSinglePost = async (id: string): Promise<Post> => await Post.findOneOrFail(id);
}
```

bloggering/src/posts/posts.controller.ts

```typescript
import { Post as BlogPost } from "./post.entity";
import { PostsService } from "./posts.service";
import { Controller, Get, Param, Post, Body, UseGuards, Req } from "@nestjs/common";
import { AuthGuard } from "@nestjs/passport";

@Controller("posts")
export class PostsController {
  constructor(private readonly postsService: PostsService) {}

  @Get()
  async findAll(): Promise<BlogPost[]> {
    return await this.postsService.getPosts();
  }

  @Get(":id")
  async find(@Param("id") postId: string): Promise<BlogPost> {
    return await this.postsService.getSinglePost(postId);
  }

  @Post()
  @UseGuards(AuthGuard("jwt"))
  async create(@Body() newPost: BlogPost, @Req() req) {
    // The JWT guard attaches the authenticated user to the request.
    const user = req["user"];
    const newPostId = await this.postsService.createPost(newPost.title, user, newPost.content);
    return { id: newPostId };
  }
}
```

In the PostsService we changed the methods to be async, return promises, and call the active record methods of our Post entity (available because we extended BaseEntity, remember?).

Moreover, we had to make small changes to the PostsController as well. The methods became async and now return Promise<>. The create method passes the whole user, instead of just the user name, to the PostsService.

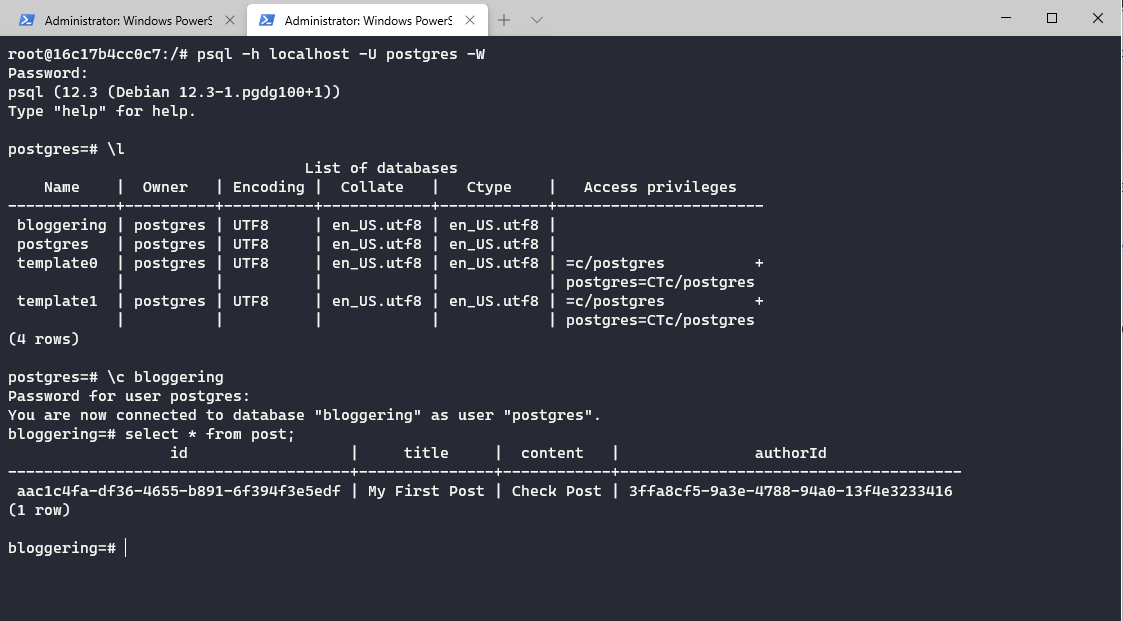

Now create your database (in this tutorial we named it bloggering). You can do it by hand or with a script. Later, when using Docker, it will be created automatically for us.

We are ready to test our app. If for some reason you need to debug and you are using VSCode, make sure to install ts-node locally (even if you have it installed globally) and add the following launch.json inside the .vscode folder at the root of your application. Usually this file is not versioned, and each developer can have their own debug setup.

bloggering/.vscode/launch.json

```json
{
  "configurations": [
    {
      "type": "node",
      "request": "launch",
      "name": "Debug Nest App",
      "args": ["src/main.ts"],
      "runtimeArgs": [
        "-r",
        "ts-node/register",
        "-r",
        "tsconfig-paths/register"
      ],
      "autoAttachChildProcesses": true
    }
  ]
}
```

Let's try to create a new post to test what we've done.

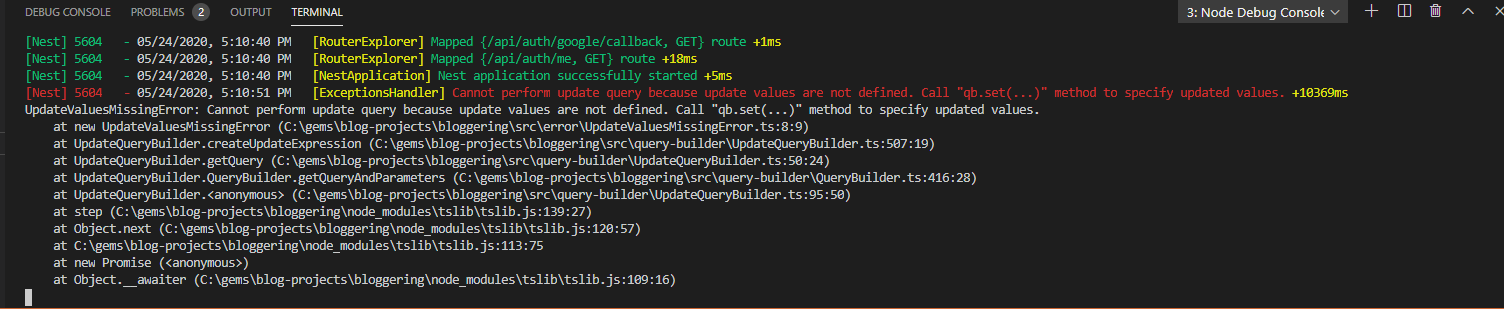

Oops… An error.

This error happened because we are setting the post with an author that is not persisted. Remember that in the post mapping we defined a relation between the post and the user (author), so TypeORM expects an already created User on the other side of the association. We are going to fix it by adding logic to the AuthService that creates the user if it does not exist. In a more sophisticated application you would probably want to move this logic into a UserService, but for us here, let's keep it simple.

Change the validateOAuthLogin in the AuthService to be like this:

bloggering/src/auth/auth.service.ts

```typescript
async validateOAuthLogin(email: string, name: string, thirdPartyId: string, provider: string): Promise<string> {
  try {
    // Look the user up by the provider's profile id and create it on first login.
    let user: User = await User.findByThirdPartyId(thirdPartyId);
    if (!user) {
      user = new User();
      user.isActive = true;
      user.email = email;
      user.name = name;
      user.thirdPartyId = thirdPartyId;
      user = await User.save(user);
    }
    return this.jwtService.sign({
      id: user.id,
      provider,
    });
  } catch (err) {
    throw new InternalServerErrorException("validateOAuthLogin", err.message);
  }
}
```

We also need to create the findByThirdPartyId method in the User entity.

bloggering/src/users/user.entity.ts

```typescript
static findByThirdPartyId(thirdPartyId: string): Promise<User> {
  return this.createQueryBuilder("user")
    .where("user.thirdPartyId = :thirdPartyId", { thirdPartyId })
    .getOne();
}
```

Basically, there is now a check when the user logs in: if the user does not exist, we create one. Because we are using Google OAuth for login, we look the user up by the Google profile id, which is why we added a method to retrieve a user by thirdPartyId to our User entity.
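Stripped of TypeORM and JWT, the check above is the classic "find or create" flow. The sketch below reproduces it with an in-memory array so it can run on its own; `StoredUser`, `findOrCreateUser`, and the sample values are assumptions made for the illustration, and the function is synchronous only to keep it short.

```typescript
// Minimal in-memory stand-in for the users table; illustrative only.
interface StoredUser {
  id: number;
  thirdPartyId: string;
  email: string;
  name: string;
  isActive: boolean;
}

const users: StoredUser[] = [];

// Looks the user up by the provider's profile id and creates it on first login.
function findOrCreateUser(thirdPartyId: string, email: string, name: string): StoredUser {
  const existing = users.filter((u) => u.thirdPartyId === thirdPartyId)[0];
  if (existing) {
    return existing;
  }
  const created: StoredUser = { id: users.length + 1, thirdPartyId, email, name, isActive: true };
  users.push(created);
  return created;
}

const firstLogin = findOrCreateUser("google-123", "ana@example.com", "Ana");
const secondLogin = findOrCreateUser("google-123", "ana@example.com", "Ana");
console.log(firstLogin.id === secondLogin.id, users.length);
```

Logging in twice with the same profile id yields the same stored user, which is what lets the post keep a stable author association.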

The last piece we need to change to make it work is our JwtStrategy. This time, the validate method will query the database to get the real user, check permissions, and return it.

bloggering/src/auth/strategies/jwt.strategy.ts

```typescript
async validate(payload: any) {
  const user = await User.findOneOrFail(payload.id);
  // validate token, user claims, etc
  return user;
}
```

Finally! Now we can authenticate with our google login, create a post and retrieve it. We can restart the application as many times as we want and the data will still be there! Go check the database to look at our new post.

Add Configuration Service

After spending this whole text talking about being careful with sensitive information hardcoded in our code, it is time to get rid of it. This applies not only to sensitive information but also to configuration that can change between environments, like hosts, ports, secret keys, etc. Most readers already know plenty of strategies to deal with this: most languages encourage the use of environment variables, others use config files, but the purpose is always the same. Keep your config data out of your code, making it possible to change settings according to the environment. Node.js projects usually use .env files to store settings, and NestJS follows the same approach but adds its own infrastructure on top of process.env to help with the modularity and testability of the code. They call it ConfigService, and we are going to use it to create our custom configuration files, split into three groups:

- Application Configuration

- Authentication Configuration

- Database Configuration

Clearly this is overengineering for a small project like this one. Usually you only need to split things at this granularity in big projects, but as I wanted to explore the options, I chose to break it up. Feel free to follow the documentation example, load the env file directly into the ConfigService, and inject it as global if you think that is more appropriate for your project. It is up to you to decide what is best for YOUR application. First and foremost, we need to install the NestJS config dependency.

PS C:\gems\blog-projects\bloggering> npm i @nestjs/config --save

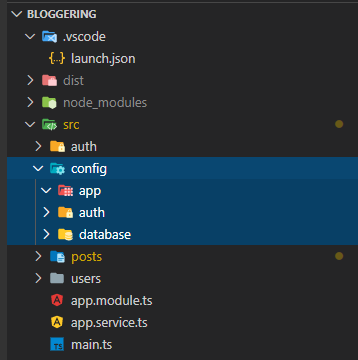

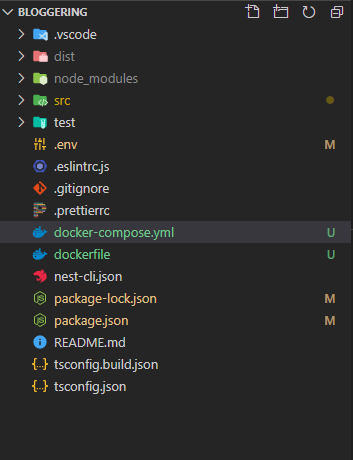

Then create a /config folder under the /src folder. Inside the /config folder, create one folder for each of the groups we talked about before: /app, /auth, and /database.

Now, in the root of your project, create a .env file to hold our configs. This file should not be versioned! Check that it is already ignored in the .gitignore file, just to be safe. Inside the environment file we can add all of our configs.

```
APP_ENV=development
APP_NAME=Bloggering
APP_PORT=3000
APP_HOST=http://localhost

GOOGLE_OAUTH2_CLIENTID=<your_client_id>
GOOGLE_OAUTH2_CLIENT_SECRET=<your_client_secret>
OAUTH2_JWT_SECRET=<your_jwt_secret>

TYPEORM_CONNECTION=postgres
TYPEORM_HOST=localhost
TYPEORM_USERNAME=postgres
TYPEORM_PASSWORD=<your_postgres_password>
TYPEORM_DATABASE=bloggering
TYPEORM_PORT=5432
TYPEORM_LOGGING=true
TYPEORM_ENTITIES=dist/**/*.entity.js
TYPEORM_SYNCHRONIZE=true
```

Of course, feel free to change them according to your environment. In my example, Postgres is exposed on port 5432, but you can set another port if you wish.
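One thing to keep in mind about a file like this is that every value reaches the application as a string, so numbers and booleans have to be parsed explicitly. The following sketch shows one possible way to do that for the database settings. The function `parseDatabaseSettings`, its return shape, and the fallback values are assumptions made for the example; it takes the variables as a plain object so it does not depend on process.env.

```typescript
type Env = { [key: string]: string | undefined };

interface DatabaseSettings {
  host: string;
  port: number;
  logging: boolean;
  synchronize: boolean;
}

// Hypothetical helper: turns raw string variables into typed settings.
function parseDatabaseSettings(env: Env): DatabaseSettings {
  // Fall back to the usual Postgres port when the variable is missing.
  const port = parseInt(env.TYPEORM_PORT || "5432", 10);
  if (isNaN(port)) {
    throw new Error("TYPEORM_PORT must be a number, got: " + env.TYPEORM_PORT);
  }
  return {
    host: env.TYPEORM_HOST || "127.0.0.1",
    port,
    // Only the exact string "true" enables a flag; anything else is false.
    logging: env.TYPEORM_LOGGING === "true",
    synchronize: env.TYPEORM_SYNCHRONIZE === "true",
  };
}

const parsedSettings = parseDatabaseSettings({
  TYPEORM_HOST: "localhost",
  TYPEORM_PORT: "5432",
  TYPEORM_LOGGING: "true",
});
console.log(parsedSettings);
```

Failing fast on a malformed value at startup is usually easier to diagnose than a connection error later on.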

To deal with the general application configuration, we are going to create an AppConfigService and expose it via an AppConfigModule.

Create three files inside /src/config/app folder: configuration.ts, configuration.service.ts, and configuration.module.ts.

bloggering/src/config/app/configuration.ts

```typescript
import { registerAs } from "@nestjs/config";

export default registerAs("app", () => ({
  env: process.env.APP_ENV,
  name: process.env.APP_NAME,
  host: process.env.APP_HOST,
  port: process.env.APP_PORT,
}));
```

The app/configuration.ts file is solely responsible for loading the configuration from the env file and creating a namespace for it. To do that, we call the registerAs factory from @nestjs/config.

bloggering/src/config/app/configuration.service.ts

```typescript
import { Injectable } from "@nestjs/common";
import { ConfigService } from "@nestjs/config";

@Injectable()
export class AppConfigService {
  constructor(private configService: ConfigService) {}

  get name(): string {
    return this.configService.get<string>("app.name");
  }

  get env(): string {
    return this.configService.get<string>("app.env");
  }

  get host(): string {
    return this.configService.get<string>("app.host");
  }

  get port(): number {
    // Environment values arrive as strings, so convert explicitly.
    return Number(this.configService.get<string>("app.port"));
  }
}
```

The AppConfigService is our actual custom settings service. The class is basically a wrapper on top of the ConfigService (injected via the constructor), and it will be exposed via a module, allowing us to register these settings only for specific features if we want.
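If the idea of a namespace plus a typed wrapper still feels abstract, the dependency-free sketch below mimics it. To be clear, this is not how @nestjs/config is implemented: `getByPath`, `ConfigTree`, and `AppSettings` are hypothetical names, and the lookup is a deliberately simplified version of resolving a dotted key such as app.name.

```typescript
// Hypothetical, simplified lookup; NOT the actual @nestjs/config implementation.
type ConfigTree = { [key: string]: unknown };

function getByPath(tree: ConfigTree, path: string): unknown {
  let current: unknown = tree;
  const keys = path.split(".");
  for (let i = 0; i < keys.length; i++) {
    if (typeof current !== "object" || current === null) {
      return undefined;
    }
    current = (current as ConfigTree)[keys[i]];
  }
  return current;
}

// Each namespace groups the values registered under one name, like "app".
const configTree: ConfigTree = {
  app: { name: "Bloggering", port: "3000" },
};

// A thin typed wrapper, in the spirit of AppConfigService.
class AppSettings {
  constructor(private readonly tree: ConfigTree) {}

  get name(): string {
    return String(getByPath(this.tree, "app.name"));
  }

  get port(): number {
    return Number(getByPath(this.tree, "app.port"));
  }
}

const appSettings = new AppSettings(configTree);
console.log(appSettings.name, appSettings.port);
```

The benefit of the wrapper is that the rest of the code asks for a typed property and never has to know the key names or do the string conversion itself.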

bloggering/src/config/app/configuration.module.ts

```typescript
import { Module } from "@nestjs/common";
import configuration from "./configuration";
import { AppConfigService } from "./configuration.service";
import { ConfigModule, ConfigService } from "@nestjs/config";

@Module({
  imports: [
    ConfigModule.forRoot({
      load: [configuration],
    }),
  ],
  providers: [ConfigService, AppConfigService],
  exports: [ConfigService, AppConfigService],
})
export class AppConfigModule {}
```

The last piece of infrastructure for the app's configuration settings is the module. Through it, our features can import our custom AppConfigService.

To show how to use it, let's replace the hardcoded port in the main.ts file with the port from the env file. But to be able to use it there, make sure to first import the AppConfigModule in our AppModule.

bloggering/src/app.module.ts

```typescript
@Module({
  imports: [
    PostsModule, AuthModule,
    AppConfigModule, ...],
  ...
})
```

Finally, we can change our main.ts to set the port based on our settings. Because it is the entry point of the NestJS application, we cannot inject the AppConfigService into the bootstrap function, but we can use the application instance to retrieve our custom configuration. Check the code below.

bloggering/src/main.ts

```typescript
import { NestFactory } from "@nestjs/core";
import { AppModule } from "./app.module";
import { ValidationPipe } from "@nestjs/common";
import { AppConfigService } from "./config/app/configuration.service";

async function bootstrap() {
  const app = await NestFactory.create(AppModule);
  app.useGlobalPipes(new ValidationPipe());
  app.setGlobalPrefix("api");
  // Resolve the provider by its class token rather than by a string name.
  const appConfig = app.get(AppConfigService);
  await app.listen(appConfig.port || 3000);
}
bootstrap();
```

If everything worked as we wish, you should be able to change the APP_PORT setting in our .env file, and the application should start listening on the new port.

The database configuration service needs some customization because the OrmConfigService needs to implement the TypeOrmOptionsFactory interface. We need to implement the createTypeOrmOptions method, which returns a TypeOrmModuleOptions, to fulfill the contract that TypeORM expects. Looking at the code makes it easier to understand.

bloggering/src/config/database/configuration.ts

```typescript
import { registerAs } from "@nestjs/config";

export default registerAs("orm", () => ({
  type: process.env.TYPEORM_CONNECTION,
  host: process.env.TYPEORM_HOST || "127.0.0.1",
  username: process.env.TYPEORM_USERNAME,
  password: process.env.TYPEORM_PASSWORD,
  database: process.env.TYPEORM_DATABASE,
  logging: process.env.TYPEORM_LOGGING === "true",
  synchronize: process.env.TYPEORM_SYNCHRONIZE === "true",
  port: Number.parseInt(process.env.TYPEORM_PORT, 10),
  // Read inside the factory so the variable is resolved when the
  // configuration is loaded, not when this file is imported.
  entities: [process.env.TYPEORM_ENTITIES || "dist/**/*.entity.js"],
}));
```

We added default values for settings that are not provided and special handling for arrays, booleans, and numbers. We gave these settings the namespace "orm".

bloggering/src/config/database/configuration.service.ts

```typescript
import { Injectable } from "@nestjs/common";
import { ConfigService } from "@nestjs/config";
import { TypeOrmModuleOptions, TypeOrmOptionsFactory } from "@nestjs/typeorm";

@Injectable()
export class OrmConfigService implements TypeOrmOptionsFactory {
  constructor(private configService: ConfigService) {}

  createTypeOrmOptions(): TypeOrmModuleOptions {
    const type: any = this.configService.get<string>("orm.type");
    return {
      type,
      host: this.configService.get<string>("orm.host"),
      username: this.configService.get<string>("orm.username"),
      password: this.configService.get<string>("orm.password"),
      database: this.configService.get<string>("orm.database"),
      port: this.configService.get<number>("orm.port"),
      logging: this.configService.get<boolean>("orm.logging"),
      entities: this.configService.get<string[]>("orm.entities"),
      synchronize: this.configService.get<boolean>("orm.synchronize"),
    };
  }
}
```

Besides that, there is one point worth talking about: how to replace our hardcoded settings with the new settings in the TypeORM module configuration in app.module.ts.

Currently, we call the forRoot method of the TypeOrmModule:

```typescript
TypeOrmModule.forRoot({
  type: "postgres",
  host: "localhost",
  port: 5432,
  username: "postgres",
  password: "<password>",
  database: "bloggering",
  entities: [Post, User],
  synchronize: true,
});
```

The problem is that this method receives static configuration. To remedy that, we need to call the forRootAsync method instead, to which we can provide a TypeOrmOptionsFactory that returns a TypeOrmModuleOptions. That is why our OrmConfigService needs to implement this exact interface. Now TypeORM will know how to get the settings from our custom database config service.
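The difference between handing over static values and handing over a factory can be shown without NestJS at all. In the sketch below, `OptionsFactory`, `EnvBackedFactory`, `connectStatic`, and `connectDeferred` are invented for the illustration and only resemble the real contract in spirit: the factory is asked for its options at the moment they are needed, so it can read settings that were not known when the code was written.

```typescript
interface ConnectionOptions {
  host: string;
  port: number;
}

// Contract similar in spirit to TypeOrmOptionsFactory: options are produced on demand.
interface OptionsFactory {
  createOptions(): ConnectionOptions;
}

// Hypothetical settings source standing in for a config service.
class EnvBackedFactory implements OptionsFactory {
  constructor(private readonly env: { [key: string]: string | undefined }) {}

  createOptions(): ConnectionOptions {
    return {
      host: this.env.TYPEORM_HOST || "127.0.0.1",
      port: parseInt(this.env.TYPEORM_PORT || "5432", 10),
    };
  }
}

// Static registration: the values are fixed where the call is written.
function connectStatic(options: ConnectionOptions): string {
  return options.host + ":" + options.port;
}

// Deferred registration: the factory is only asked for options when connecting.
function connectDeferred(factory: OptionsFactory): string {
  return connectStatic(factory.createOptions());
}

const sampleEnv: { [key: string]: string | undefined } = { TYPEORM_HOST: "localhost" };
const optionsFactory = new EnvBackedFactory(sampleEnv);
sampleEnv.TYPEORM_PORT = "6543"; // set after the factory was created, before it is used
const target = connectDeferred(optionsFactory);
console.log(target);
```

Because the port is read when createOptions runs, the value set after the factory was constructed is still picked up, which a static options object would not allow.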

Check the AppModule after the changes.

bloggering/src/app.module.ts

```typescript
import { Module } from "@nestjs/common";
import { AppService } from "./app.service";
import { PostsModule } from "./posts/posts.module";
import { AuthModule } from "./auth/auth.module";
import { TypeOrmModule } from "@nestjs/typeorm";
import { AppConfigModule } from "./config/app/configuration.module";
import { OrmConfigModule } from "./config/database/configuration.module";
import { OrmConfigService } from "./config/database/configuration.service";

@Module({
  imports: [
    PostsModule,
    AuthModule,
    AppConfigModule,
    OrmConfigModule,
    TypeOrmModule.forRootAsync({
      imports: [OrmConfigModule],
      useClass: OrmConfigService,
    }),
  ],
  controllers: [],
  providers: [AppService],
})
export class AppModule {}
```

From now on I'll speed things up because, for the rest of the settings, the procedure is basically the same: we are going to create a configuration, a custom config service, and a module for the OAuth settings and for any other "feature" or logic block we want. You can check their content in the bloggering project repository on GitHub. Do not forget to change the places where we used these hardcoded settings so that they inject the corresponding config service. Of course, as I said at the beginning of the section, this strategy is only worthwhile for big projects; for small projects you can just let the ConfigModule load your env file automatically. You can even make it global and inject it all over the place.

```typescript
@Module({
  imports: [ConfigModule.forRoot()],
})
```

Finally, just run the application and check that everything is still working fine. We are ready for the next part: adding Docker and Docker Compose to our stack!

Add Docker and Docker Compose

In this section, we are going to configure our application to run via Docker, and also configure Docker Compose to automatically pull the images for Postgres, and later for Elasticsearch, and then run everything together without installing anything manually.

If you already know what Docker is and what it is for, you can skip the next paragraph and go straight to the real action; otherwise, here is a brief introduction.

Until now, we have been starting our application on our local machine and accessing the database installed on localhost. But what if you want to run it on another machine, for instance your notebook? Or what if other members of your team want to run the application as well? You or they will have to execute all the steps manually: install Node, install the dependencies, and install and run the database on each machine. You can script everything, but even so it is tedious and error-prone: the script can be wrong, and the environments can differ in OS, libraries, database version, etc. This is a potential problem not only for development but also when you want to deploy your application. You develop everything on your machine and it works fine. Then you ship your code to production and boom! It is a completely different environment, and you, of course, wonder what the heck happened. On my machine it worked like a charm! These are the kinds of problems we can solve with containers, and here Docker comes to the rescue. Using it, we can pack, ship, and run any application as a lightweight, portable, self-sufficient container, which can run virtually anywhere. Creating CI/CD pipelines also becomes much easier, because a self-contained image gives you a predictable, isolated environment for your application.

First and foremost, install Docker for your OS. A quick Google search will find the link. To install Docker for Windows, go to https://docs.docker.com/docker-for-windows/install/.

While I use Windows as my OS, it is important to note that I am running Docker with Linux containers!

First, create a Dockerfile in the root of the application.

```dockerfile
FROM node:12.13-alpine AS development
ENV NODE_ENV=development
WORKDIR /usr/src/app
COPY package*.json ./
RUN npm install --only=development
COPY . .
RUN npm run build

FROM node:12.13-alpine AS production
ARG NODE_ENV=production
ENV NODE_ENV=${NODE_ENV}
WORKDIR /usr/src/app
COPY package*.json ./
RUN npm install --only=production
COPY . .
COPY --from=development /usr/src/app/dist ./dist
CMD ["node", "dist/main"]
```

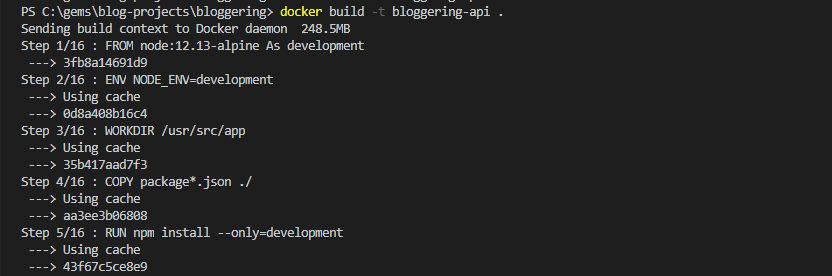

This is a multi-stage build file, with one stage we called development and another called production. With a multi-stage build like this, the final image is much more lightweight.

The development stage is used to generate our JavaScript files in the /dist folder. If you have no clue what I'm talking about, I suggest you read up on TypeScript. Long story short, TypeScript is a superset of JavaScript that gives you incredible help during development, but in the end it needs to be compiled to plain JavaScript. What the NestJS build (npm run build) does under the hood is call the TypeScript compiler to compile the files to JavaScript.

- Development step
  - The FROM command is the beginning of a stage. We specify an image to be used and give a name to that stage. This image will be used until the next FROM command, which starts another stage with another image.
  - The WORKDIR command sets the working directory for the subsequent COPY, RUN, and CMD commands. The first COPY copies our package.json and package-lock.json to the workdir, then we run npm install with the flag that installs only dev dependencies. The next COPY copies the rest of the application files to the workdir in the container. Finally, the RUN command runs npm run build to build our application, generating the /dist folder.
- Production step
  - The next FROM creates another stage using a fresh image and a different name. The two stages have no connection to each other.
  - The next instructions are very similar to the previous stage, with some differences. When we run npm install we use the --only=production flag so that dev dependencies are not installed.
  - We have one more COPY command to copy the /dist content from the development stage. Finally, CMD sets the default command to execute when the image is run.

With the Dockerfile created, we are ready to build our image by running the command below.

PS C:\gems\blog-projects\bloggering> docker build -t bloggering-api .

The -t flag sets a tag; we give the image the name bloggering-api.

You can check if the image was created with the command docker images in your terminal.

Now we can run our container. Make sure to use the same tag you used to build the image.

docker run bloggering-api

But wait, there is a problem: our application is running in a container, so if you have a local database, it will not be reachable by default. There are some ways to overcome this, but well… we are not going to use any of them. Instead, we will use a tool called Docker Compose that comes with Docker.

Docker Compose allows us to define and run more than one container and to set their configuration and the dependencies between them. Imagine that you have a big application with multiple microservices, a database, a message queue, and any other service you could imagine. Instead of starting each of them manually, we just configure docker-compose to run one container for each of our services, declare which one depends on which, and voilà! With a single magical command, we start all of them.

Let's see it in practice.

As we did before with the Dockerfile, now create a docker-compose.yml file in the root of our application.

docker-compose.yml

```yaml
version: '3.7'

services:
  postgres:
    container_name: ${TYPEORM_HOST}
    image: postgres:12-alpine
    networks:
      - blognetwork
    env_file:
      - .env
    environment:
      POSTGRES_PASSWORD: ${TYPEORM_PASSWORD}
      POSTGRES_USER: ${TYPEORM_USERNAME}
      POSTGRES_DB: ${TYPEORM_DATABASE}
      # PGDATA is the variable name the Postgres image reads.
      PGDATA: /var/lib/postgresql/data
    ports:
      - ${TYPEORM_PORT}:${TYPEORM_PORT}
    volumes:
      - pgdata:/var/lib/postgresql/data

  main:
    container_name: bloggering-api
    restart: unless-stopped
    build:
      context: .
      target: development
    command: npm run start:dev
    ports:
      - ${APP_PORT}:${APP_PORT}
    volumes:
      - .:/usr/src/app
      - /usr/src/app/node_modules
    env_file:
      - .env
    networks:
      - blognetwork
    depends_on:
      - postgres

networks:
  blognetwork:

volumes:
  pgdata:
```

A quick look on this file should give you a clue about what is happening here. We created two services (will have two separate containers in our application), one for the Postgres database and the other for our API.

The first service is our database, Postgres. We specified the container name to be the same as our TYPEORM*HOST. We do this because instead of accessing the localhost now, we are accessing this database in a container from another container in the same network. And by default, the database host is the container_name. Then we set the image, the same-named network we will set to the main service and the file we have our environment variables. In the environment section, we set pre-defined Postgres’s variables. You can check the complete list in the Postgres Docker image documentation.

The last two settings are the ports (Host_Port:Container_Port) we want to expose and the named volume we want to mount. Volumes are how we persist our data even when the container is recreated.

A database with the name given in POSTGRES_DB will be created the first time the container starts with an empty data directory. It is also possible to run initialization scripts by placing them in a folder called /docker-entrypoint-initdb.d. See more details in the documentation mentioned above.

The main service has the container name set to bloggering-api. In the build section, we defined the context, which tells Docker which files should be sent to the Docker daemon. In our case, that's our whole application, so we pass in ., which means the whole current directory. The target matches the development stage in our Dockerfile and makes Docker ignore the production stage.

The command instruction sets the command that runs when the container starts. Because this is a development environment, we run it with the watch flag so the application reloads every time something changes. The volumes part has some tricks. Because we want the container to reflect any change we make to our files, we mount our host's current directory into the container. That mount would also make the host's node_modules folder hide the one installed inside the container, so we mount an anonymous volume on /usr/src/app/node_modules to keep the container's own copy.

The rest is self-explanatory: we set the environment file and the network (the same as the Postgres service), and we tell Docker Compose that this service depends on the database service. So Docker Compose will start our Postgres service first and then our bloggering-api service. Keep in mind that depends_on only controls the start order; it does not wait until Postgres is ready to accept connections.
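To make the start order idea concrete, here is a minimal sketch in plain TypeScript. It is only an illustration of "start dependencies first", not how Docker Compose is actually implemented, and the ServiceGraph type and startOrder function are names I made up for this example.

```typescript
// Hypothetical, simplified model of the depends_on entries in docker-compose.yml:
// each service name maps to the services it depends on.
type ServiceGraph = Record<string, string[]>;

// Returns one valid start order: every service appears after its dependencies.
function startOrder(services: ServiceGraph): string[] {
  const order: string[] = [];
  const state: Record<string, "visiting" | "done"> = {};

  const visit = (serviceName: string): void => {
    if (state[serviceName] === "done") return;
    if (state[serviceName] === "visiting") {
      throw new Error(`Circular dependency involving "${serviceName}"`);
    }
    state[serviceName] = "visiting";
    // Start everything this service depends on before the service itself.
    for (const dependency of services[serviceName] ?? []) {
      visit(dependency);
    }
    state[serviceName] = "done";
    order.push(serviceName);
  };

  Object.keys(services).forEach((serviceName) => visit(serviceName));
  return order;
}

// The two services of our docker-compose.yml.
const bloggering: ServiceGraph = {
  main: ["postgres"],
  postgres: [],
};

console.log(startOrder(bloggering)); // [ 'postgres', 'main' ]
```

Even though main is declared first in the object, it ends up last in the result because it depends on postgres.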

If you had already configured your database settings, do not forget to change TYPEORM_HOST from localhost to the name you want for the database container.
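The sketch below shows why the host matters. It is a hypothetical helper (buildPostgresUrl is not part of the project, and TypeORM reads these variables on its own) that assembles a connection URL from the same TYPEORM_* variables we keep in the .env file. The values in the usage example, including the bloggering-db host name, are made up.

```typescript
// Variables we keep in the .env file (names taken from this article).
interface DatabaseEnv {
  TYPEORM_HOST?: string;
  TYPEORM_PORT?: string;
  TYPEORM_USERNAME?: string;
  TYPEORM_PASSWORD?: string;
  TYPEORM_DATABASE?: string;
}

// Hypothetical helper: builds a Postgres connection URL from the environment.
// The fallback values are assumptions for this sketch.
function buildPostgresUrl(env: DatabaseEnv): string {
  const host = env.TYPEORM_HOST ?? "localhost";
  const port = env.TYPEORM_PORT ?? "5432";
  // Encode credentials so characters like "@" do not break the URL.
  const user = encodeURIComponent(env.TYPEORM_USERNAME ?? "postgres");
  const password = encodeURIComponent(env.TYPEORM_PASSWORD ?? "");
  const database = env.TYPEORM_DATABASE ?? "postgres";
  return `postgres://${user}:${password}@${host}:${port}/${database}`;
}

// Inside the Docker network, the host is the container name, not localhost.
const dbUrl = buildPostgresUrl({
  TYPEORM_HOST: "bloggering-db", // made-up container name
  TYPEORM_PORT: "5432",
  TYPEORM_USERNAME: "blog",
  TYPEORM_PASSWORD: "s3cret",
  TYPEORM_DATABASE: "bloggering",
});

console.log(dbUrl); // postgres://blog:s3cret@bloggering-db:5432/bloggering
```

With TYPEORM_HOST left as localhost, the API container would look for Postgres inside itself and fail to connect.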

Now we can start everything with docker-compose.

PS C:\gems\blog-projects\bloggering> docker-compose up

Docker Compose will start both our services, in the correct order.

When you need to install new dependencies via npm or yarn, you'll have to run the following command.

PS C:\gems\blog-projects\bloggering> docker-compose up --build -V

Remember that when we created our docker-compose.yml file, we set an anonymous volume for the node_modules of our API so it would not be overridden. The --build flag rebuilds the image, which runs npm install again, and -V removes that anonymous volume and recreates it.

However, you may notice that because our application now runs via docker-compose and we call npm run start:dev we cannot debug it anymore. In order to fix that problem, let’s change some pieces.

Here are the steps to debug in VS Code, the IDE that I use.

Change the docker-compose.yml file to run npm run start:debug instead, and expose port 9229, the default Node.js inspector port that the VS Code debugger attaches to.

docker-compose.yml

services:
  ...
  main:
    ...
    command: npm run start:debug
    ports:
      - ${APP_PORT}:${APP_PORT}
      - 9229:9229
    ...

In your package.json file, change the start:debug entry inside scripts.

package.json

"start:debug": "nest start --debug 0.0.0.0:9229 --watch",

And finally, replace our .vscode/launch.json with the below content.

{
  "version": "0.2.0",
  "configurations": [
    {
      "type": "node",
      "request": "attach",
      "name": "Debug: Bloggering",
      "remoteRoot": "/usr/src/app",
      "localRoot": "${workspaceFolder}",
      "protocol": "inspector",
      "port": 9229,
      "restart": true,
      "address": "0.0.0.0",
      "skipFiles": ["<node_internals>/**"]
    }
  ]
}

After these changes, put a breakpoint in your code, run the docker-compose up command, and try to attach the debugger to see if it works.

Hopefully, everything worked fine and now we have our environment all set working in containers! Yeah!

Let’s move on to the next and final step of our backend project in NestJS!

Add Elastic Search

In short, Elasticsearch is a REST HTTP service that wraps Apache Lucene, adding scalability and making it distributed. Lucene is a powerful Java-based indexing and search technology. Elasticsearch use cases vary from indexing millions of log entries to indexing e-commerce content, blogs, and streaming data, showing related content, and many others. For this example, we are going to use it to index our posts (title, content, author) and provide endpoints for quick search and related content.

First of all, change the docker-compose.yml to add our new elasticsearch service. Because we are using it just for educational purposes, the configuration will be very simple, with just one node. It is out of the scope of this article to show how to configure Elasticsearch properly for a production environment.

In addition to this, we need to add two more settings to our .env file.

Add environment variables to set the Elasticsearch host and port:

...

ELASTIC_SEARCH_HOST=es01

ELASTIC_SEARCH_PORT=9200

...

docker-compose.yml

  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.7.0
    container_name: ${ELASTIC_SEARCH_HOST}
    environment:
      - bootstrap.memory_lock=true
      - discovery.type=single-node
      - "ES_JAVA_OPTS=-Xms2g -Xmx2g"
      - cluster.routing.allocation.disk.threshold_enabled=false
    env_file:
      - .env
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      # The official image keeps its data under /usr/share/elasticsearch/data.
      # Remember to declare esdata01 under the top-level volumes key, next to pgdata.
      - esdata01:/usr/share/elasticsearch/data
    ports:
      - 9200:${ELASTIC_SEARCH_PORT}
    networks:
      - blognetwork

Then update the main service, bloggering-api, so that it depends on our new elasticsearch service.

  main:
    container_name: bloggering-api
    ...
    depends_on:
      - postgres
      - elasticsearch

Install the official Elasticsearch client and the NestJS Elasticsearch module.

PS C:\gems\blog-projects\bloggering> npm i @elastic/elasticsearch --save

PS C:\gems\blog-projects\bloggering> npm i @nestjs/elasticsearch --save

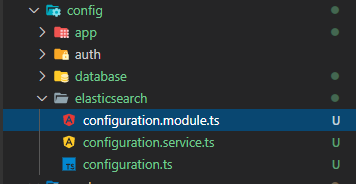

Because we already have our configuration pattern defined using custom configuration files, let's do the same for Elasticsearch. If you skipped the custom configuration, feel free to skip this part and do whatever you did before. Create a folder called elasticsearch inside our config folder, and inside it create our 3 basic files: configuration.ts, configuration.service.ts, and configuration.module.ts. I won't go into detail because we already covered this in the Configuration section.

The structure should look like:

bloggering/src/config/elasticsearch/configuration.ts

import { registerAs } from "@nestjs/config";

// Registers the settings under the "es" namespace, e.g. "es.host" and "es.port".
export default registerAs("es", () => ({
  host: process.env.ELASTIC_SEARCH_HOST || "127.0.0.1",
  port: process.env.ELASTIC_SEARCH_PORT || "9200",
}));

bloggering/src/config/elasticsearch/configuration.service.ts

import { Injectable } from "@nestjs/common";
import { ConfigService } from "@nestjs/config";
import { ElasticsearchOptionsFactory, ElasticsearchModuleOptions } from "@nestjs/elasticsearch";

@Injectable()
export class ElasticsearchConfigService implements ElasticsearchOptionsFactory {
  constructor(private configService: ConfigService) {}

  // Builds the node URL from the "es" settings registered in configuration.ts.
  createElasticsearchOptions(): ElasticsearchModuleOptions {
    const node = `http://${this.configService.get<string>("es.host")}:${this.configService.get<string>("es.port")}`;
    return { node };
  }
}

Like the TypeORM configuration, the Elasticsearch module accepts a class that implements a specific interface, ElasticsearchOptionsFactory, whose createElasticsearchOptions method returns ElasticsearchModuleOptions.
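If you want to see the resolution logic without the NestJS wiring, the following plain TypeScript sketch applies the same defaults as configuration.ts and builds the same node URL as the service above. The resolveElasticsearchNode function and the ElasticsearchEnv interface are hypothetical names used only for this illustration.

```typescript
// The two variables we added to the .env file.
interface ElasticsearchEnv {
  ELASTIC_SEARCH_HOST?: string;
  ELASTIC_SEARCH_PORT?: string;
}

// Hypothetical helper combining the defaults from configuration.ts
// with the URL format used in ElasticsearchConfigService.
function resolveElasticsearchNode(env: ElasticsearchEnv): string {
  const host = env.ELASTIC_SEARCH_HOST || "127.0.0.1";
  const port = env.ELASTIC_SEARCH_PORT || "9200";
  return `http://${host}:${port}`;
}

// Without a .env file we fall back to a local Elasticsearch.
console.log(resolveElasticsearchNode({})); // http://127.0.0.1:9200

// With the values from our .env file we target the es01 container.
console.log(
  resolveElasticsearchNode({ ELASTIC_SEARCH_HOST: "es01", ELASTIC_SEARCH_PORT: "9200" })
); // http://es01:9200
```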

bloggering/src/config/elasticsearch/configuration.module.ts

import { Module } from "@nestjs/common";
import configuration from "./configuration";
import { ElasticsearchConfigService } from "./configuration.service";
import { ConfigModule, ConfigService } from "@nestjs/config";

@Module({
  imports: [
    ConfigModule.forRoot({
      load: [configuration],
    }),
  ],
  providers: [ConfigService, ElasticsearchConfigService],
  exports: [ConfigService, ElasticsearchConfigService],
})
export class ElasticsearchConfigModule {}

Creating a subscriber

A subscriber is an object that can be attached to the TypeORM pipeline to listen to certain events on an entity. It should implement an interface called EntitySubscriberInterface, which lets it handle common events such as beforeInsert, afterInsert, beforeUpdate, afterUpdate, and others.

Subscribers sometimes overlap with another TypeORM feature called entity listeners, where you decorate methods in the entity itself (for example with @AfterInsert) to listen for specific events.

Although they look similar, they serve slightly different purposes and have different limitations: we cannot inject dependencies into a listener method, and we cannot subscribe conditionally as we can with subscribers.

| Subscribers |
| --- |
| (+) Can be subscribed/or listen dynamically |
| (+) Can inject dependencies |
| (+) Can listen to all entities |
| (-) More complex, need to be subscribed and registered as provider |

| Listeners |
| --- |
| (+) Easy to implement |
| (+) Can use the own data of the entity, increasing cohesion |
| (-) Can't be applied dynamically |
| (-) Can't have injected dependencies |

The documentation instructs us to add subscribers to the TypeORM configuration as a glob pattern, but we are not going to do that. Letting TypeORM instantiate the subscriber makes it impossible to use dependency injection in it. Instead, we'll register it manually in the constructor of the PostSubscriber so that NestJS creates it and takes care of the injection. It feels a bit hacky to me, but it is the only way I have found so far to inject dependencies into a subscriber. You can check discussions here and here.
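The self-registration trick can be sketched without TypeORM or NestJS at all. Everything in the block below (FakeConnection, FakeIndexer, BlogPost, BlogUser, PostSubscriberSketch) is a made-up stand-in for illustration; the only idea borrowed from the real code is that the subscriber receives its dependencies through the constructor and pushes itself into the connection's list of subscribers.

```typescript
// Minimal stand-in for the subscriber contract (not the real TypeORM interface).
interface SubscriberSketch<T> {
  listenTo(): new (...args: any[]) => T;
  afterInsert(entity: T): void;
}

// Stand-in for the TypeORM connection: it only keeps a list of subscribers.
class FakeConnection {
  readonly subscribers: SubscriberSketch<any>[] = [];

  // Notifies only the subscribers that listen to the entity's class.
  insert(entity: object): void {
    for (const subscriber of this.subscribers) {
      if (entity instanceof subscriber.listenTo()) {
        subscriber.afterInsert(entity);
      }
    }
  }
}

class BlogPost {
  constructor(public title: string) {}
}

class BlogUser {
  constructor(public userName: string) {}
}

// Stand-in for an injected service such as ElasticsearchService.
class FakeIndexer {
  readonly indexed: string[] = [];
  index(title: string): void {
    this.indexed.push(title);
  }
}

class PostSubscriberSketch implements SubscriberSketch<BlogPost> {
  // Whoever creates this object (NestJS in the real application) supplies both
  // dependencies, and the subscriber registers itself on the connection.
  constructor(connection: FakeConnection, private readonly indexer: FakeIndexer) {
    connection.subscribers.push(this);
  }

  listenTo() {
    return BlogPost;
  }

  afterInsert(entity: BlogPost): void {
    this.indexer.index(entity.title);
  }
}

const connection = new FakeConnection();
const indexer = new FakeIndexer();
new PostSubscriberSketch(connection, indexer);

connection.insert(new BlogPost("Hello NestJS"));
connection.insert(new BlogUser("joao")); // ignored: the subscriber listens to BlogPost only

console.log(indexer.indexed); // [ 'Hello NestJS' ]
```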

Create the posts.subscriber.ts file under the /posts folder.

bloggering/src/posts/posts.subscriber.ts

import { EventSubscriber, EntitySubscriberInterface, Connection, InsertEvent, UpdateEvent } from "typeorm";
import { Post } from "./post.entity";
import { ElasticsearchService } from "@nestjs/elasticsearch";

@EventSubscriber()
export class PostSubscriber implements EntitySubscriberInterface<Post> {
  constructor(private readonly connection: Connection, private readonly elasticSearchService: ElasticsearchService) {
    // Register manually so that NestJS creates this instance and injects its dependencies.
    connection.subscribers.push(this);
  }

  // Restrict this subscriber to events of the Post entity.
  listenTo() {
    return Post;
  }

  async afterInsert(event: InsertEvent<Post>) {
    await this.createOrUpdateIndexForPost(event.entity);
  }

  afterUpdate(event: UpdateEvent<Post>) {
    console.log(`After Post Updated: `, event);
  }

  // Sends the post to Elasticsearch, using the post id as the document id.
  async createOrUpdateIndexForPost(post: Post) {
    const indexName = Post.indexName();
    try {
      await this.elasticSearchService.index({
        index: indexName,
        id: post.id,
        body: post.toIndex(),
      });
    } catch (error) {
      console.log(error);
    }
  }
}

It is important to implement the listenTo method, otherwise the subscriber will listen to all entities configured in TypeORM. The afterInsert method is fired after a Post is inserted and receives the entity that was just inserted. Then we call createOrUpdateIndexForPost, which is responsible for calling Elasticsearch and indexing our post information. Both the name of the index and the body of the document come from helper methods on the Post entity. (Documents are roughly the equivalent of rows in a relational database.)
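To visualize what ends up being sent to Elasticsearch, here is a small sketch in plain TypeScript. The buildIndexRequest function and the IndexablePost interface are hypothetical; they only mirror the index name and the document body described above, and the sample post is made up.

```typescript
// The parts of a post that we want to index.
interface IndexablePost {
  id: string;
  author: { name: string };
  title: string;
  content: string;
}

// Hypothetical helper: assembles the argument passed to the index call.
function buildIndexRequest(post: IndexablePost) {
  return {
    index: "post", // the lowercased entity name
    // Reusing the database id means indexing the same post again
    // replaces the existing document instead of creating a new one.
    id: post.id,
    body: {
      id: post.id,
      author: post.author.name,
      title: post.title,
      content: post.content,
    },
  };
}

const indexRequest = buildIndexRequest({
  id: "42",
  author: { name: "Joao" },
  title: "Creating a NestJS application",
  content: "Persistence, configuration, Docker and Elasticsearch.",
});

console.log(indexRequest.index, indexRequest.id, indexRequest.body.author); // post 42 Joao
```

Note that the author is flattened to a plain name, so the document can be searched by author without a join.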

Notice that the post index will be created automatically on the first call if it does not exist yet.

Under the hood, all the Elasticsearch client does for us is call the REST endpoints of the Elasticsearch server. You can call them manually for tests if you want. For example, after starting the elasticsearch container, try to execute a curl command inside it to check that Elasticsearch is running.

docker exec -it es01 curl http://localhost:9200

So far so good. Now add the helper methods to the Post entity. At the end of the class, add the methods indexName() and toIndex().

bloggering/src/posts/post.entity.ts

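// Name of the Elasticsearch index: the lowercased class name, "post".
static indexName() {
  return Post.name.toLowerCase();
}

// Plain object that is stored as the body of the document.
toIndex() {
  return {
    id: this.id,
    author: this.author.name,
    title: this.title,
    content: this.content,
  };
}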

In our PostsModule, add the Elasticsearch configuration and register the PostSubscriber as a provider.

bloggering/src/posts/posts.module.ts

import { Module } from "@nestjs/common";
import { PostsController } from "./posts.controller";
import { PostsService } from "./posts.service";
import { ElasticsearchModule } from "@nestjs/elasticsearch";
import { ElasticsearchConfigService } from "src/config/elasticsearch/configuration.service";
import { ElasticsearchConfigModule } from "src/config/elasticsearch/configuration.module";
import { PostSubscriber } from "./posts.subscriber";

@Module({
  controllers: [PostsController],
  // Registering PostSubscriber as a provider lets NestJS inject its dependencies.
  providers: [PostsService, PostSubscriber],
  imports: [
    ElasticsearchModule.registerAsync({
      imports: [ElasticsearchConfigModule],
      useClass: ElasticsearchConfigService,
    }),
  ],
})
export class PostsModule {}

From now on, the indexing part should be working. Try to run docker-compose to check if it works. If something goes wrong, look at the NestJS log and debug the application if needed. Double-check the docker-compose.yml configuration and the environment file. Make sure you are not using localhost or the wrong port. Call Elasticsearch manually to check if it is working.

docker exec -it es01 curl http://localhost:9200

If everything worked fine, you can execute the commands below to check the result:

docker exec -it es01 curl http://localhost:9200/post/

docker exec -it es01 curl http://localhost:9200/post/_search

The first one should return the schema (settings and mappings) of our post index, and the second the result of a search.

By the way, the search is the last piece of code we are going to implement. The Bloggering application will have one endpoint for fast keyword search and another to get related content.

Create a method search in the PostsService class.

bloggering/src/posts/posts.service.ts

import { Injectable, ForbiddenException } from "@nestjs/common";
import { Post } from "./post.entity";
import { User } from "src/users/user.entity";
import { ElasticsearchService } from "@nestjs/elasticsearch";

@Injectable()
export class PostsService {
  constructor(private readonly elasticSearchService: ElasticsearchService) {}

  // Returns the "hits" section of the Elasticsearch response, not a Post entity.
  async search(options: any): Promise<any> {
    const { body } = await this.elasticSearchService.search({
      index: Post.indexName(),
      body: {
        query: {
          // eslint-disable-next-line @typescript-eslint/camelcase
          multi_match: {
            query: options.query,
            // "title^2" doubles the weight of matches found in the title.
            fields: ["title^2", "content", "author"],
          },
        },
      },
    });
    return body.hits;
  }

  async createPost(title: string, author: User, content: string): Promise<any> {
    if (!author.isActive) throw new ForbiddenException("Cannot create a post because the user is inactive");
    const insertedPost = await Post.save(new Post(author, title, content));
    return insertedPost.id;
  }

  getPosts = async (): Promise<Post[]> => await Post.find();

  getSinglePost = async (id: string): Promise<Post> => await Post.findOneOrFail(id);
}

Our new search method receives an options argument that contains a property called query with the keywords searched by the client. It then calls elasticSearchService.search, passing the name of the index and a body with a multi_match query: the expression searched by the user (options.query) and the array of fields to search in. Notice that we can use the caret (^) to boost a specific field. In my case, the title has its weight doubled, so matches in the title have a bigger impact on the score.
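If you later want to tune the boosts without touching the query by hand, a tiny helper can generate the same body. This is just a sketch: buildMultiMatchBody and the FieldBoosts type are names I invented, and a boost of 1 is treated as "no boost" so the output matches the fields array used in the service.

```typescript
// Field names mapped to their boost factor (1 means no boost).
type FieldBoosts = Record<string, number>;

// Hypothetical helper: builds the body of a multi_match search request.
function buildMultiMatchBody(query: string, boosts: FieldBoosts) {
  const fields = Object.keys(boosts).map((field) =>
    boosts[field] === 1 ? field : `${field}^${boosts[field]}`
  );
  return { query: { multi_match: { query, fields } } };
}

const searchBody = buildMultiMatchBody("nestjs", { title: 2, content: 1, author: 1 });

console.log(JSON.stringify(searchBody));
// {"query":{"multi_match":{"query":"nestjs","fields":["title^2","content","author"]}}}
```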

Unlike most relational databases, which give a binary answer (found or not found), Elasticsearch returns a score for each result. The higher the score, the more likely the result is relevant.
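The score comes back with every hit, so the client can use it, for instance to drop weak matches. The sketch below assumes the usual shape of an Elasticsearch 7 search response (hits.hits with _id, _score and _source) and declares only the fields it needs. The toResults helper, the minScore threshold, and the sample data are all made up for illustration.

```typescript
// Partial shape of a search response body (only the fields used here).
interface SearchHit<T> {
  _id: string;
  _score: number;
  _source: T;
}

interface SearchBody<T> {
  hits: { hits: SearchHit<T>[] };
}

interface IndexedPost {
  title: string;
  author: string;
  content: string;
}

// Hypothetical helper: keeps hits at or above minScore and flattens them.
function toResults<T extends object>(
  body: SearchBody<T>,
  minScore = 0
): Array<T & { id: string; score: number }> {
  return body.hits.hits
    .filter((hit) => hit._score >= minScore)
    .map((hit) => ({ ...hit._source, id: hit._id, score: hit._score }));
}

// Made-up response with one strong and one weak match.
const sample: SearchBody<IndexedPost> = {
  hits: {
    hits: [
      { _id: "1", _score: 1.8, _source: { title: "NestJS and Docker", author: "Joao", content: "..." } },
      { _id: "2", _score: 0.4, _source: { title: "Something else", author: "Joao", content: "docker" } },
    ],
  },
};

console.log(toResults(sample, 1).map((result) => result.id)); // [ '1' ]
```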

Time to expose our search via an endpoint in our application. After that, our clients will be able to query for posts in a quick way!

Just add a search method with a specific route in posts.controller.ts.

bloggering/src/posts/posts.controller.ts

import { Post as BlogPost } from "./post.entity";
import { PostsService } from "./posts.service";
import { Controller, Get, Param, Post, Body, UseGuards, Req, Query } from "@nestjs/common";
import { AuthGuard } from "@nestjs/passport";

@Controller("posts")
export class PostsController {
  constructor(private readonly postsService: PostsService) {}

  @Get()
  async findAll(): Promise<BlogPost[]> {
    return await this.postsService.getPosts();
  }

  // Declared before ":id" so that "search" is not treated as a post id.
  // Returns the Elasticsearch hits, e.g. GET /posts/search?q=nestjs
  @Get("search")
  async search(@Query("q") content: string): Promise<any> {
    return this.postsService.search({ query: content });
  }

  @Get(":id")
  async find(@Param("id") postId: string): Promise<BlogPost> {
    return await this.postsService.getSinglePost(postId);
  }

  @Post()
  @UseGuards(AuthGuard("jwt"))
  async create(@Body() newPost: BlogPost, @Req() req) {
    const user = req["user"];
    const newPostId = await this.postsService.createPost(newPost.title, user, newPost.content);
    return { id: newPostId };
  }
}
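On the client side, calling the new endpoint is just a GET request with the keywords in the q parameter. The helper below is a hypothetical sketch that builds such a URL. The base address http://localhost:3000 is an assumption; use whatever host and APP_PORT your API runs on.

```typescript
// Hypothetical client-side helper: builds the URL of the search endpoint.
function buildSearchUrl(baseUrl: string, keywords: string): string {
  // Drop trailing slashes from the base and escape the keywords for the query string.
  const trimmedBase = baseUrl.replace(/\/+$/, "");
  return `${trimmedBase}/posts/search?q=${encodeURIComponent(keywords)}`;
}

console.log(buildSearchUrl("http://localhost:3000/", "nest js & docker"));
// http://localhost:3000/posts/search?q=nest%20js%20%26%20docker
```

Escaping matters here because characters such as & would otherwise be read as the start of a new query parameter.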

For any help, suggestions, or feedback, contact me on @joaopozo or via email at joaopozo@gmail.com ;)

References:

https://www.elastic.co/guide/en/elastic-stack-get-started/current/get-started-docker.html

https://www.elastic.co/guide/en/elasticsearch/reference/current/docs.html

https://www.elastic.co/guide/en/elasticsearch/client/javascript-api/current/api-reference.html

https://blog.logrocket.com/containerized-development-nestjs-docker/